I. Introduction: Meta Reimagines Smart Eyewear, Again

At its Connect 2025 event, Meta didn’t just unveil a new gadget; it presented its most compelling vision yet for the future of personal computing. CEO Mark Zuckerberg showcased a product lineup that marks a significant step toward merging our physical and digital realities, moving smart glasses out of niche technology and toward mainstream consumer electronics. The launch was less an update than a strategic realignment of Meta’s entire approach to wearable AI.

The company has now clearly defined a three-pronged strategy for its smart eyewear, establishing distinct product categories to cater to a wide spectrum of users. This new, segmented lineup includes:

- Display AI Glasses: The groundbreaking flagship product, the Meta Ray-Ban Display, which introduces a visual interface and a revolutionary control system.

- Camera AI Glasses: The Ray-Ban Meta (Gen 2), an upgraded version of Meta’s popular best seller, alongside the stylish Oakley Meta HSTN.

- Specialized AI Glasses: A new category targeting athletes, the fitness-centric Oakley Meta Vanguard.

This multi-tiered approach is a sophisticated strategy aimed at normalizing the presence of smart glasses in society. After validating the market with over 2 million units of the first generation sold by early 2025, Meta is now diversifying its offerings to accelerate adoption. The affordable Ray-Ban Meta (Gen 2) at $379 provides an accessible entry point for the curious lifestyle consumer. The Oakley Meta Vanguard at $499 targets the high-value athletic niche with specialized integrations like Garmin and Strava. Finally, the $799 Meta Ray-Ban Display serves as the aspirational “halo” product, pushing technological boundaries and defining the category’s future. By creating these distinct entry points, Meta increases the visibility and social acceptance of camera-enabled eyewear across various contexts, directly addressing the social awkwardness that famously hindered early pioneers like Google Glass.

This report will serve as the definitive guide to the star of the show: the Meta Ray-Ban Display. We will unpack its revolutionary technology, explore its real-world applications, place it within the broader 2025 lineup, and analyze its profound implications for the future of human-computer interaction.

II. The Main Event: Unpacking the Meta Ray-Ban Display

Meta has positioned the Ray-Ban Display not as an incremental update, but as the vanguard of an entirely new product category: “Display AI Glasses”. Meta describes it as the first device to integrate a camera, a multi-microphone array, open-ear speakers, onboard computing, advanced AI, and a full-color visual display into a single, stylish, and comfortable form factor that people would actually want to wear.

The In-Lens Display: Your Private Window to the Digital World

The centerpiece of the new glasses is the integrated display. It is a monocular (right eye only) heads-up display (HUD), which means it projects a small, fixed screen into the user’s field of view rather than creating a fully immersive augmented reality experience. This display appears to float a couple of feet in front of the wearer, designed for quick, glanceable information.

The technical specifications of this display are formidable. It features a full-color, high-resolution 600×600 pixel LCoS display, providing a pixel density of 42 pixels per degree (ppd). This metric is crucial; it means the display is exceptionally sharp and makes small text easy to read, with a density that surpasses even high-end VR headsets like the Apple Vision Pro. With a brightness of up to 5,000 nits, the display remains visible even in direct sunlight, a feat aided by the standard inclusion of Transitions® lenses that automatically darken in bright conditions. The field of view is a modest 20 degrees, reinforcing its purpose for brief interactions rather than sustained viewing.
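The 42 ppd figure can be cross-checked against the resolution and field of view with simple arithmetic. The sketch below rests on an assumption not stated above: that the 20-degree field of view is measured diagonally across the square 600×600 panel, with pixels spread evenly across it. Under that assumption the diagonal spans roughly 849 pixels, which works out to about 42 pixels per degree.

```python
import math


def pixels_per_degree(width_px: int, height_px: int, diagonal_fov_deg: float) -> float:
    """Average angular pixel density along the diagonal.

    Assumes (an interpretation, not a published spec) that the FOV figure is
    diagonal and that pixels are distributed uniformly across it, which real
    optics only approximate.
    """
    diagonal_px = math.hypot(width_px, height_px)  # about 848.5 px for 600x600
    return diagonal_px / diagonal_fov_deg


print(f"{pixels_per_degree(600, 600, 20.0):.1f} ppd")  # prints "42.4 ppd"
```

If the 20 degrees were instead a horizontal measurement, the same panel would give only 600 / 20 = 30 ppd, so the diagonal reading is the one that agrees with the quoted 42 ppd.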

Perhaps the most critical design choice is the focus on privacy. The display is engineered with just 2% light leakage, making it virtually impossible for anyone nearby to see what is being displayed. This ensures that notifications, messages, and other personal information remain completely private to the wearer.

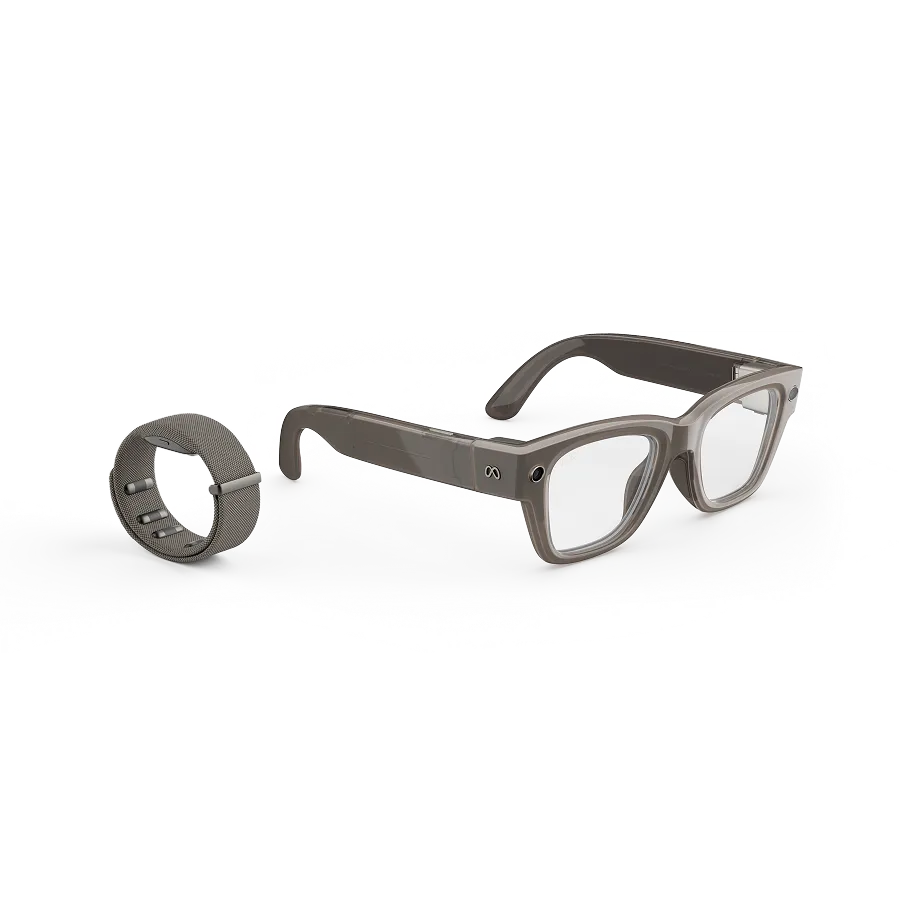

The Meta Neural Band: Rewriting Human-Computer Interaction

If the display is the main attraction, the accompanying Meta Neural Band is the revolutionary enabler. It is Meta’s elegant solution to one of the biggest challenges for smart glasses: how to control them intuitively and discreetly without resorting to awkward voice commands in public or constantly tapping the side of your head.

The band uses surface electromyography (sEMG). Sensors within the wristband detect the subtle electrical signals generated by your muscles as you make small finger and hand movements. These “barely perceptible movements” are then translated into commands for the glasses, creating a seamless and silent control interface. The system is sensitive enough to register muscle activity even before a movement is visible, the result of years of research involving nearly 200,000 participants.
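Meta has not detailed the band’s decoding pipeline here, and production sEMG systems rely on trained machine-learning models rather than fixed rules. As a rough illustration of a first step common to many EMG pipelines, the hypothetical sketch below computes a moving root-mean-square (RMS) envelope of one synthetic sensor channel and flags the moment muscle activity rises above a threshold. The window size, threshold, and signal are invented for the example.

```python
import math
from typing import List


def rms_envelope(samples: List[float], window: int) -> List[float]:
    """Trailing moving RMS: a smoothed measure of signal energy at each sample."""
    envelope = []
    for i in range(len(samples)):
        chunk = samples[max(0, i - window + 1): i + 1]
        envelope.append(math.sqrt(sum(x * x for x in chunk) / len(chunk)))
    return envelope


def detect_onsets(envelope: List[float], threshold: float) -> List[int]:
    """Indices where the envelope rises to or above the threshold (rising edges only)."""
    onsets = []
    active = False
    for i, value in enumerate(envelope):
        if value >= threshold and not active:
            onsets.append(i)
        active = value >= threshold
    return onsets


# Synthetic channel: low-level noise, a 20-sample burst (a hypothetical "pinch"), then noise again.
quiet = [0.01 * (-1) ** i for i in range(50)]
burst = [1.0 * (-1) ** i for i in range(20)]
signal = quiet + burst + quiet

print(detect_onsets(rms_envelope(signal, window=8), threshold=0.3))  # prints [50]
```

A real decoder would combine many channels and classify the envelope’s shape into specific gestures; thresholding only answers “did the muscle activate?”.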

This technology enables a new language of interaction. Users can:

- Select items by pinching their thumb and index finger together.

- Scroll through menus or notifications with a simple side-to-side swipe of the thumb.

- Adjust volume by pinching their fingers and rotating their wrist, mimicking the turning of a physical dial.

- Compose text by mimicking the act of writing on a physical surface with their finger.
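To make the interaction model concrete, here is a toy sketch of how gestures might be routed to interface actions once the band has recognized them. The class, its method names, and the three-degrees-per-volume-step mapping are all hypothetical and do not reflect any published Meta API; the point is the dial metaphor, where wrist rotation during a pinch becomes a proportional, clamped volume change.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class GlassesUI:
    """Hypothetical stand-in for the glasses' interface state."""
    items: List[str]
    cursor: int = 0
    volume: int = 50
    selected: List[str] = field(default_factory=list)

    def on_pinch(self) -> None:
        # Thumb-to-index pinch: select the highlighted item.
        self.selected.append(self.items[self.cursor])

    def on_thumb_swipe(self, direction: int) -> None:
        # Side-to-side thumb swipe: +1 moves forward, -1 moves back, clamped to the list.
        self.cursor = max(0, min(len(self.items) - 1, self.cursor + direction))

    def on_pinch_rotate(self, wrist_degrees: float, degrees_per_step: float = 3.0) -> None:
        # Pinch-and-twist "dial": whole volume steps proportional to rotation, clamped to 0..100.
        steps = int(wrist_degrees / degrees_per_step)
        self.volume = max(0, min(100, self.volume + steps))


ui = GlassesUI(items=["Messages", "Camera", "Music"])
ui.on_thumb_swipe(+1)        # highlight "Camera"
ui.on_pinch()                # select it
ui.on_pinch_rotate(30.0)     # twist 30 degrees clockwise: +10 steps
print(ui.selected, ui.volume)  # prints ['Camera'] 60
```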

The band itself is designed for all-day wear. It is constructed from Vectran, a material used for the Mars Rover’s crash pads, making it as strong as steel when pulled yet soft and flexible on the wrist. It offers an 18-hour battery life and an IPX7 water-resistance rating, so rain and handwashing pose no problem. It comes in Black and Sand to match the glasses and is available in three sizes to ensure the precise fit the EMG sensors need to function correctly.

While the display is the feature that grabs headlines, the Neural Band is arguably the more transformative technology. It represents Meta’s long-term strategy to solve the fundamental input problem for the future of computing. True augmented reality, as envisioned in prototypes like “Project Orion,” will require a control method far more sophisticated than voice or touch to manipulate complex 3D holographic interfaces. By bundling the Neural Band with the Display glasses, Meta is not just selling an accessory; it is seeding an ecosystem and building user muscle memory for its future AR platform. The success of this new interaction paradigm is a direct test of the viability of Meta’s entire AR roadmap.

Hardware and Design: Is It Still Fashion?

Meta and EssilorLuxottica have worked to maintain the iconic Ray-Ban aesthetic, but the demands of the new technology have necessitated design compromises. The frames of the Meta Ray-Ban Display are noticeably chunkier and, at 69 grams, heavier than their display-less counterpart, the 52-gram Gen 2 model. This trade-off between advanced functionality and everyday comfort will be a key factor for potential buyers.

Housed within these frames is a powerful suite of hardware. The glasses feature a 12MP ultra-wide camera with a 3x digital zoom, allowing for high-quality photo and video capture. The audio system is equally impressive, with a six-microphone array for crystal-clear voice commands and calls, complemented by discreet open-ear speakers that deliver audio to the wearer without isolating them from their surroundings.

Battery life for the glasses is rated for up to six hours of mixed use. The included charging case, which features a clever collapsible design, extends total battery life to up to 30 hours, ensuring the device can last through a full day and beyond.
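The relationship between the per-charge and total figures can be expressed as a quick calculation. This sketch assumes that a quoted total includes the glasses’ own charge and ignores charging losses, so it gives an upper bound on how many full top-ups the case holds. The Gen 2 figures (8 hours on the glasses, 56 hours in total) come from the Gen 2 specifications quoted in this report.

```python
def case_recharges(glasses_hours: float, total_hours: float) -> float:
    """Approximate number of full glasses recharges stored in the case.

    Assumes the quoted total already includes the glasses' own charge and
    ignores charging losses, so real-world results will be somewhat lower.
    """
    return (total_hours - glasses_hours) / glasses_hours


print(case_recharges(6, 30))  # Meta Ray-Ban Display: prints 4.0
print(case_recharges(8, 56))  # Ray-Ban Meta (Gen 2): prints 6.0
```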

III. A New Reality: Core Features and Daily Use Cases

The true potential of the Meta Ray-Ban Display is realized when its advanced hardware is applied to everyday situations. The technology moves beyond specifications on a page to become a powerful tool for communication, navigation, and creativity.

Visual Meta AI: Your Context-Aware Assistant

The combination of a camera that sees the world and a display that can show information supercharges the capabilities of Meta AI. As Zuckerberg stated, this is the first form factor that can “let AI see what you see, hear what you hear”. This leads to a range of powerful use cases:

- Visual Search: A user can look at a historic building and ask, “Hey Meta, what can you tell me about that monument?” and receive contextual information directly on the display.

- Step-by-Step Guidance: The glasses can display instructions for tasks like assembling furniture or following a complex recipe, leaving the user’s hands completely free to perform the task.

- Instant Translation: When traveling, a user can simply look at a menu or a street sign in a foreign language and see the English translation appear in their field of view.

Seamless Communication, Reimagined

The glasses fundamentally change how we interact with messaging and calling platforms.

- Hands-Free Messaging: Users can privately view incoming texts and multimedia messages from WhatsApp, Messenger, and Instagram on the in-lens display. They can then send replies using subtle hand gestures with the Neural Band, all without ever pulling out their phone.

- Point-of-View (POV) Video Calls: This is a standout feature with transformative potential. During a video call on WhatsApp or Messenger, the person on the other end sees the world from the wearer’s perspective through the glasses’ camera, while the wearer sees the caller’s face on their display. This enables powerful applications for remote assistance, virtual tourism, and deeply personal shared experiences.

Navigation and Live Translation

The device offers powerful tools for navigating both physical spaces and linguistic barriers.

- Heads-Up Navigation: The glasses can provide turn-by-turn walking directions, complete with a visual map on the display. This allows users to navigate unfamiliar cities while remaining fully aware of their surroundings, a significant safety and convenience improvement over staring down at a smartphone screen. This feature is set to launch in beta in select cities.

- Breaking Language Barriers: The live captioning feature provides a real-time transcription of conversations, which is not only useful for translation but also serves as a powerful accessibility tool for individuals who are hard-of-hearing. The glasses can display live translations for conversations in English, Spanish, French, and Italian.

Next-Level Content Creation

For social media creators and hobbyists, the glasses offer a streamlined and intuitive workflow.

- The Perfect Shot, Every Time: The in-lens display functions as a real-time camera viewfinder, allowing users to perfectly frame their photos and videos before capturing them.

- Instant Review and Share: After capturing content, it can be immediately reviewed on the glasses’ display and shared to social platforms, eliminating several steps from the traditional phone-based creation process.

IV. The 2025 Meta Smart Glasses Lineup: Choosing Your Pair

To fully appreciate the Meta Ray-Ban Display, it’s essential to understand its place within the broader 2025 smart glasses lineup. Meta has created a clear hierarchy of products, allowing consumers to choose the device that best fits their needs and budget.

Meta’s 2025 AI Glasses Compared

A direct comparison highlights the key differentiators between the three main models announced at Connect 2025.

| Feature | Meta Ray-Ban Display | Ray-Ban Meta (Gen 2) | Oakley Meta Vanguard |
| --- | --- | --- | --- |
| Price | $799 | Starts at $379 | $499 |
| Target Audience | Tech Early Adopters, Creators | Everyday Lifestyle Users | Athletes, Fitness Enthusiasts |
| Key Feature | In-Lens Display & Neural Band | Upgraded Camera & Battery | Garmin/Strava Integration |
| Display | Yes (600×600 Color HUD) | No | No |
| Control Method | Neural Band, Voice, Touch | Voice, Touch | Voice, Touch |
| Camera | 12MP with 3x Zoom | 12MP, 3K Video | Central 12MP Camera |
| Glasses Battery | Up to 6 hours | Up to 8 hours | Up to 9 hours |
| Total Battery | Up to 30 hours (with case) | Up to 56 hours (with case) | TBD |
| Special Features | POV Video Calls, Visual AI | Conversation Focus, Live Translation | Real-time fitness metrics, Auto-capture |
| Availability | Sept 30, 2025 (US) | Available Now | Oct 21, 2025 |


The Upgraded Ray-Ban Meta (Gen 2): The Refined Classic ($379)

For those who want the core smart glasses experience without the premium price tag, the Ray-Ban Meta (Gen 2) is the ideal choice. It builds on the success of the first generation with significant improvements. The battery life has nearly doubled to eight hours of use on a single charge, and the charging case now provides an additional 48 hours of power. The camera has been upgraded to capture video in sharp 3K resolution. New software features, like “conversation focus,” use the microphone array to amplify the voice of the person you are speaking to while filtering out background noise. This model is the practical, polished choice for everyday users.

The Oakley Meta Vanguard: The Connected Athlete ($499)

Meta has also carved out a new niche with the Oakley Meta Vanguard, a device designed specifically for high-intensity sports. Its killer feature is direct integration with Garmin devices and Strava, allowing athletes to ask Meta AI for real-time audio feedback on their heart rate, pace, and other fitness metrics without looking away from the road or trail. A unique “autocapture” feature automatically records short video clips when the user hits key milestones, like distance markers or heart rate thresholds. The glasses feature a rugged, wrap-around design with an IP67 dust and water resistance rating, and their speakers are six decibels louder to cut through wind noise.
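Meta has not described how autocapture decides when to record. One plausible approach is to make milestone triggers edge-triggered, so they fire once when a value crosses a threshold rather than continuously while it stays there. The hypothetical sketch below applies that idea to a stream of (time, distance, heart rate) readings; the 1 km step, the 160 bpm threshold, and the sample run are invented for illustration.

```python
from typing import Iterable, List, Tuple


def autocapture_triggers(
    samples: Iterable[Tuple[float, float, int]],  # (time_s, distance_km, heart_rate_bpm)
    distance_step_km: float = 1.0,
    hr_threshold_bpm: int = 160,
) -> List[Tuple[float, str]]:
    """Return (time, reason) pairs where a clip would start recording.

    Distance fires once at each new multiple of distance_step_km. Heart rate
    fires only on upward crossings of hr_threshold_bpm, so it does not
    re-trigger while the athlete stays above it.
    """
    triggers = []
    next_marker = distance_step_km
    above = False
    for t, dist, hr in samples:
        while dist >= next_marker:
            triggers.append((t, f"distance {next_marker:g} km"))
            next_marker += distance_step_km
        if hr >= hr_threshold_bpm and not above:
            triggers.append((t, f"heart rate {hr} bpm"))
        above = hr >= hr_threshold_bpm
    return triggers


run = [(0, 0.0, 120), (300, 0.95, 150), (330, 1.02, 158), (600, 1.9, 162),
       (620, 1.96, 165), (700, 2.1, 155), (760, 2.3, 161)]
for event in autocapture_triggers(run):
    print(event)
```

For this sample run the sketch reports four triggers: the 1 km and 2 km markers, plus two separate upward crossings of 160 bpm, while the reading of 165 bpm at 620 s is ignored because the heart rate never dropped below the threshold in between.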

V. The Practical Details: Price, Availability, and What You Get

The Meta Ray-Ban Display is positioned as a premium technology product with a corresponding price and a carefully managed rollout strategy.

Pricing and What’s Included

The Meta Ray-Ban Display is priced at $799 USD. This price includes not only the glasses themselves but also the essential Meta Neural Band and the portable, collapsible charging case.

Release Schedule and Availability

The launch is being handled in phases:

- United States Launch: The glasses will be available starting September 30, 2025.

- Initial Purchase Channel: In a strategic move, the glasses will initially be sold exclusively through in-person fittings at a limited number of brick-and-mortar retailers. These include Best Buy, LensCrafters, Sunglass Hut, and official Ray-Ban stores, with select Verizon stores to follow.

- International Expansion: A broader global rollout is planned for early 2026, targeting Canada, France, Italy, and the United Kingdom.

This deliberately high-friction, in-store-only launch process is a strategic decision. The effectiveness of the Neural Band is highly dependent on a proper fit, and a poor initial experience could lead to negative reviews. By requiring an in-store demo and professional fitting, Meta ensures that every early adopter has the device set up correctly, mitigating this risk. This high-touch approach also helps justify the premium price, framing the purchase as a personalized consultation rather than a simple transaction. It allows Meta to control the initial rollout and gather high-quality user feedback before a wider global launch.

Lens and Prescription Options

The glasses come standard with Transitions® lenses, which adapt to changing light conditions, making them suitable for both indoor and outdoor use. For users who require vision correction, prescription lenses are supported within a range of -4 to +4 diopters.

VI. The Bigger Picture: Privacy and the Path to True AR

The launch of a device as capable as the Meta Ray-Ban Display inevitably reignites the complex debate around privacy and the future of wearable technology.

The Privacy Debate Reignited

Smart glasses with integrated cameras inherently raise concerns about the potential for covert recording without consent. Meta’s primary safeguard is a front-facing “capture LED” that illuminates whenever the camera is recording or livestreaming. However, critics and data protection commissions have questioned the effectiveness of this measure, arguing that the light is too small and subtle to serve as an adequate warning to those being recorded, especially when compared to the obvious action of raising a smartphone to film.

Beyond the camera, broader concerns exist regarding Meta’s data collection policies. Reports have highlighted that Meta AI is designed to be always listening for its wake word and that voice recordings are stored, a significant privacy trade-off for the convenience of a hands-free assistant. The ultimate success of these glasses will depend not just on their technical prowess, but on the establishment of new social norms and a collective agreement on the acceptable use of wearable cameras in public spaces—a challenge that the creators of Google Glass were unable to overcome.

A Stepping Stone to the Future of Computing

It is crucial to view the Meta Ray-Ban Display not as an end-product, but as a vital milestone in Meta’s long-term vision for computing. This device is a consumer-facing implementation of technologies being developed for Meta’s true augmented reality glasses, a prototype codenamed “Project Orion”. Orion is expected to feature a much larger holographic display and far more advanced capabilities, but it is still years away from being a consumer product.

The Display glasses, and particularly the Neural Band, are a way for Meta to introduce the public to the core concepts of AR interaction in a more accessible package. They represent a tangible step towards Zuckerberg’s ultimate goal: to create a computing platform based on glasses that will one day move humanity beyond the confines of the smartphone screen.

VII. Final Verdict: Are the Meta Ray-Ban Display Glasses Worth $799?

The Meta Ray-Ban Display is undeniably a landmark product. It successfully blends cutting-edge technology with high fashion in a way that no device has before. However, its high price and first-generation status make it a considered purchase.

Strengths and Weaknesses

The device’s strengths are profound: the in-lens display is crisp, bright, and impressively private; the Meta Neural Band is a genuinely revolutionary control system; and the integration of visual AI enables powerful use cases like POV video calls and heads-up navigation that feel like a true glimpse of the future.

These strengths are balanced by notable trade-offs. The $799 price tag places it firmly in the premium category. The design, while stylish, is bulkier than standard eyewear. The six-hour battery life, while respectable for its capabilities, will require daily charging. And the privacy implications are significant and should not be overlooked by any potential buyer.

Who Should Buy Them?

The decision to purchase depends heavily on the user’s profile:

- For the Tech Futurist and Early Adopter: This is an essential purchase. It offers a tangible, hands-on experience with the next paradigm of personal computing and a chance to be on the bleeding edge of a transformative technology.

- For the Content Creator: The unique capabilities, especially POV video capture and a streamlined workflow, make this a powerful and potentially game-changing tool that could unlock new forms of creative expression.

- For the Average Consumer: This is a fascinating but expensive piece of technology. For most people, it may be wiser to wait for the technology to mature and the price to decrease in future generations. The much more affordable and refined Ray-Ban Meta (Gen 2) offers the core smart glasses experience for less than half the price and is the more practical choice for the majority of users today.

VIII. Frequently Asked Questions (FAQ)

What are Meta Ray-Ban Display glasses? Meta Ray-Ban Display glasses are advanced smart glasses that integrate a high-resolution, full-color display into the right lens. They combine AI, a camera, microphones, and speakers into a stylish Ray-Ban frame, allowing you to view notifications, get navigation, translate text, and more, hands-free.

What can you do with the new display glasses? You can view texts and notifications, capture photos and videos using a 12MP camera, make two-way POV video calls, get walking directions, translate languages in real-time, control music, and interact with the Meta AI visual assistant for information about what you’re seeing.

How does the Meta Neural Band work? The Meta Neural Band is a wristband that uses electromyography (EMG) sensors to detect the tiny electrical signals from your muscle activity. It translates subtle hand and finger gestures—like pinching or swiping—into commands to control the glasses silently and discreetly.

What is the battery life of the glasses and the band? The glasses have up to 6 hours of mixed-use battery life, with the charging case providing up to 30 hours of total use. The Meta Neural Band has an all-day battery life of up to 18 hours on a single charge.

Are the Meta Ray-Ban Display glasses waterproof? The glasses are water-resistant with an IPX4 rating, meaning they can handle splashes but should not be submerged in water. The charging case is not water-resistant.

Can I get prescription lenses for them? Yes, prescription lenses are available for a range of -4.00 to +4.00 diopters.

How much do the Meta Ray-Ban Display glasses cost? The glasses cost $799 USD, which includes the Meta Neural Band and the charging case.

Where and when can I buy them? They will be available in the US starting September 30, 2025, exclusively at select physical retailers like Best Buy, LensCrafters, and Ray-Ban stores for an in-person fitting. A global release in Canada, the UK, France, and Italy is planned for early 2026.

What do I need to use the glasses? To use the glasses, you need a compatible smartphone (Android 10+ or iOS 14.4+), the Meta AI app, a valid Meta account, and a wireless internet connection for most features.

How are these different from the regular Ray-Ban Meta glasses? The key difference is the integrated in-lens display and the Meta Neural Band for gesture control. The regular Ray-Ban Meta (Gen 2) glasses have a camera, microphones, and speakers for capturing content and using an audio-only AI assistant, but they do not have a visual display or gesture-based wristband controls.
